Review Article Open Access

Intuitive Navigation in Computer Applications for People with Parkinson’s

Matthew H Woolhouse* and Alex Zaranek

Digital Music Lab, School of the Arts, McMaster University, Canada

- Corresponding Author:

- Matthew H Woolhouse

Assistant Professor of Music Cognition and Music Theory

School of the Arts, McMaster University

1280 Main St. W., Hamilton, Ontario L8S 4M2, Canada

Tel: 905 525 9140

E-mail: woolhouse@mcmaster.ca

Received Date: March 09, 2016; Accepted Date: April 29, 2016; Published Date: May 02, 2016

Citation: Woolhouse MH, Zaranek A (2016) Intuitive Navigation in Computer Applications for People with Parkinson’s. J Biomusic Eng 4:115. doi:10.4172/2090-2719.1000115

Copyright: © 2016 Woolhouse MH, et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.


Abstract

Research is reported concerning the development of intuitive navigation within a music-dance application designed for people with Parkinson’s disease (PD). Following a review of current research into the use of motion-sensing cameras in therapeutic contexts, we describe the method by which a relatively low-cost motion-sensing camera was coupled with a rotatable function menu. The rotatable element of the system enabled navigation of the entire menu using only two distinct, readily performable gestures: a side-swipe for browsing functions and a down-swipe for selecting them. To aid acceptance amongst the target audience, people with PD (who tend to be relatively elderly), the rotatable function menu was rendered as a ballerina jewelry box, with appropriate texturing and music-box style musical accompaniment. Menu implementation employed a game engine, and a software development kit allowed direct access to the camera’s functionality, enabling gesture recognition. For example, users’ side-swipe gestures spun the jewelry box (as if interacting with an object in the real world) until a desired function, presented on a facet of the box, was forward facing. Also described are features and settings designed to ensure safe and effective use of the application and to help maintain user motivation and interest.

Keywords

Dance and music; Parkinson’s disease; Rotatable function menu; Motion-sensing camera; Gesture recognition; Game engine

Introduction

Designing an easily navigable menu system is a challenge faced by computer- and game-application developers working with movement-impaired or cognitively impaired populations. Although the application or game itself may be the central focus, developers’ efforts are at risk if they are obscured behind an unnavigable, difficult-to-use menu. The main menu is very often users’ first experience of a system; first impressions can have a lasting impact upon how a game or application is perceived, and thus on the extent to which it is subsequently used. The challenge for developers is twofold. First, movement-impaired or cognitively impaired populations, such as people with Parkinson’s or Alzheimer’s disease, are generally seniors [1-3] with relatively little experience of interactive gaming hardware, such as Microsoft’s Xbox One, Sony’s PlayStation 4 or Nintendo’s Wii U. While this age-related issue will likely diminish over time, in the near to mid term it represents a considerable barrier to the widespread adoption of interactive technologies, despite the best intentions of developers [4]. Second, currently available gaming hardware is not specifically designed for people with either movement or cognitive impairments. As a result, developers have to (1) know and take into account the particular characteristics of their user group, and (2) become adept at finding workarounds whereby the technology can be used intuitively and, where possible, effortlessly.

This paper describes how researchers investigating the effects of music and dance upon people with Parkinson’s disease (PD) addressed the challenges described above, and the “rotatable function menu” that grew out of the development process. Following a review of the motion-sensing technologies currently employed in therapeutic contexts, we briefly describe an earlier version of the application, henceforth referred to as Version 1, paying particular attention to the difficulties users experienced when navigating the system’s menu. Woolhouse et al. [5] present a fuller description of Version 1, including a detailed exposition of the motivations for this area of research, namely the creation of interactive dance technologies for people with PD¹. Next, we give a detailed description of our current research in this area: the technologies utilized in the application, and its hardware and software development. Our aim is to share with the research community our experiences of using cutting-edge techniques in a real-world setting that presents a unique set of problems.

Motion-Sensors in Therapeutic Contexts

For developers, the use of motion-sensing systems in therapeutic contexts can have particular appeal. First, hardware quality, cost and ubiquity have reached a point at which scalability is now possible: developers can create sophisticated applications in the knowledge that console gaming systems are domestically widespread [14], and thus that general adoption of their work is possible. Second, software development kits (SDKs) provide developers with growing class libraries with which to readily enhance their applications’ functionality [15]. For example, Microsoft’s Kinect SDK contains multiple tools designed to exploit the camera’s capabilities, such as gesture recognition, skeletal and face tracking, and body identification. Gesture recognition might enable a developer to incorporate specific body movements into a game, triggering responses from the system; body identification, derived from the Kinect’s infrared depth data, can be used to create group-based games involving multiple players. Moreover, motion detectors such as the Kinect and Sony’s PlayStation Camera do not require users to wear or carry ancillary equipment or markers, thereby allowing free and unencumbered limb movement.

Over the past decade, researchers have increasingly sought to employ motion-sensing cameras intended for gaming in a variety of therapeutic contexts. Although we concentrate here on studies relating to Microsoft’s Kinect, considerable work has also been done to examine the benefits to health research of other motion-sensing devices, such as Sony’s PlayStation Camera (and its precursor, the Eye), Nintendo’s Wii Remote Plus [16-19], and smartphones (using their built-in tri-axial accelerometer and gyroscope) [20].

Released in 2010 as a peripheral device for Microsoft’s Xbox 360, the Kinect motion-sensing camera quickly gave rise to a number of rehabilitation studies. For example, in consultation with physical therapists at a special education school, who had identified reduced enthusiasm for rehabilitation as typical among their students, Huang [21] applied a Kinect-based system to students with muscle atrophy and cerebral palsy. Among the students who used the system, Huang reported increased motivation and more efficient rehabilitation activities, which, in turn, contributed significantly to muscle endurance and stamina. A second study focusing on cerebral palsy, conducted by Chang et al. [22], corroborated Huang’s [21] findings regarding increased motivation for rehabilitation. Chang et al. [22] found that participants using their Kinect system were significantly more likely to engage in upper-limb rehabilitation, and also noted improved exercise performance during the intervention phases of their study. In due course, we will return to the important notion of motivation, and the way in which motion-sensing cameras can enhance self-discipline, resolve and purposefulness among application users.

Musculoskeletal pain, a frequent barrier to rehabilitation [23], has also attracted the attention of researchers seeking to exploit the benefits of low-cost motion-tracking devices. Schönauer et al. [24] targeted patients with chronic lower-back and neck pain within a “serious-game” framework. In contrast to generic computer and video games, serious games frequently simulate real-world events in order to address particular issues, for example relating to the defense, education or health-care industries [25]. A central objective is to train or educate users, often in a complex virtual environment, in order to optimize their performance when entrusted with carrying out specific, detailed tasks in the real world; for reviews of the benefits and assessment criteria of serious games, see Connolly et al. [26] and Gee et al. [27]. The approach of Schönauer et al. [24] was to develop a pain-management application in which the capabilities of the Kinect were integrated into a multimodal system involving full-body motion capture and bio-signal information (heart rate). Hence, in this scenario, the Kinect was part of a larger serious game, developed to support patients, many of whom resist rehabilitation due to a debilitating fear of pain itself [28].

The relative precision of joint-angle information derivable from the Kinect [29] enables it to be used in situations where accurate and reliable information is necessary but, crucially, where expensive and complex optical motion-capture equipment might be impractical or unaffordable, as in the home. One such situation is the rehabilitation of stroke patients, who frequently require daily exercise in order to recover joint or limb articulation [30]. Knowing and recording the degree to which a patient is able to move a particular limb may facilitate the planning of graded exercises aimed at expanding the range of a joint, for example an elbow or knee. In a study of stroke survivors with hemiplegia, Sin and Lee [31] examined the effects of virtual-reality training using the Kinect on upper-body function, including range of motion and manual dexterity. In their controlled study, 20 participants undertook a six-week Kinect-based rehabilitation training program in addition to conventional physiotherapy, while the control group, also consisting of 20 participants, underwent physiotherapy only. After the intervention, upper-body range of motion was found to be significantly greater in the Kinect group. Although this comparison was confounded by the unequal total amount of training received by the two groups, Sin and Lee concluded that serious games involving motion-sensing equipment could deliver tangible benefits to those undergoing rehabilitation, in respect of both speed and degree of recovery. Saini et al. [32] present similar stroke-related research.
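As an illustration of the kind of joint-angle information referred to above, the following minimal Python sketch computes the angle at a joint (for example, the elbow) from three 3D joint positions of the sort supplied by skeletal tracking. It assumes the positions have already been read from the tracker as (x, y, z) tuples in metres; the function names and sample coordinates are hypothetical, and this is not the method used in the studies cited. Range of motion over a session is then taken, as an assumption, to be the difference between the largest and smallest angles recorded.

```python
import math
from typing import Iterable, Tuple

Point3 = Tuple[float, float, float]


def joint_angle_deg(proximal: Point3, joint: Point3, distal: Point3) -> float:
    """Angle in degrees at `joint` between the segments to `proximal` and `distal`."""
    u = [p - j for p, j in zip(proximal, joint)]  # e.g. elbow -> shoulder
    v = [d - j for d, j in zip(distal, joint)]    # e.g. elbow -> wrist
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("coincident joint positions: angle undefined")
    cos_theta = sum(a * b for a, b in zip(u, v)) / (norm_u * norm_v)
    # Clamp to [-1, 1] so floating-point drift cannot push acos out of its domain.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


def range_of_motion(angles: Iterable[float]) -> float:
    """Difference between the largest and smallest angle recorded in a session."""
    values = list(angles)
    return max(values) - min(values)


# Hypothetical camera-space coordinates (metres): shoulder, elbow, wrist.
shoulder, elbow, wrist = (0.0, 0.4, 2.0), (0.0, 0.1, 2.0), (0.3, 0.1, 2.0)
print(round(joint_angle_deg(shoulder, elbow, wrist), 1))  # 90.0
```

Working from joint positions rather than raw depth pixels keeps the calculation independent of camera resolution; noisy tracking would, in practice, call for some smoothing before the angles are compared across sessions.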

In contrast to the physical impairments discussed thus far, cognitive impairments have also been the subject of studies involving motion-sensing cameras. Alzheimer’s disease, a chronic neurodegenerative disease accounting for 60-70% of all cases of dementia [33], has attracted particular attention. For example, Leone et al. [34] implemented an information and communication-technology system incorporating a sophisticated human-computer interface. The intention was for the platform to be capable of multi-domain cognitive rehabilitation, including (1) temporal and spatial orientation, (2) visual and topographical memory, (3) verbal memory and fluency, and (4) visual and auditory attention. Using a set-top box connected to a TV monitor, an Internet connection and a Kinect camera, the user was able to download specialized rehabilitation work schedules provided by a physician. In turn, performance results could be returned to the physician via the Internet for clinical evaluation. “KiMentia”, a Kinect-based application developed by De Urturi Breton et al. [35] for the cognitive and physical rehabilitation of the elderly, focused specifically on language competence. Using the Kinect’s skeletal-tracking functionality, users of the system were required to complete mental tasks using physical gestures, the aim being to stimulate verbal cognition through a series of movement-coordination exercises. Moreover, KiMentia enabled the data generated in each session to be stored for future analysis, facilitating longitudinal study of motion-sensing technologies and Alzheimer’s disease. For further examples of human-computer interaction in which the Kinect is used for the rehabilitation of Alzheimer’s patients, see Mandiliotis et al. [36], Sacco et al. [37], and Caroppo et al. [38].

Turning to Parkinson’s disease, the focus of our research, numerous studies have attempted to exploit the motion-sensing capabilities of the Kinect for movement rehabilitation. Recognizing the need for safe, feasible and effective exercise applications appropriate for PD, Galna et al. [39] designed a therapeutic game for dynamic postural control. Given the high degree of movement variability within PD populations, care was taken to build multiple skill levels into the system: participants were trained in multi-directional reaching and stepping tasks across 12 difficulty levels of gradually increasing complexity. In an approach not dissimilar to ours, participants’ experiences were ascertained through semi-structured interviews and analyzed to inform the future development of the system. Furthermore, Galna et al. [39] measured participants’ level of immersion in the game using the Flow State Scale [40]; flow states are typically linked to enjoyment, and are therefore indicative of a user’s future willingness to engage in a particular activity. Forgoing software development altogether, Pompeu et al. [41] assessed the feasibility, safety and outcomes of an off-the-shelf gaming product, Kinect Adventures!™. Participants’ clinical outcomes were assessed using a battery of tests, including the Six-Minute Walk Test [42], the Balance Evaluation Systems Test [43], the Dynamic Gait Index [44] and the Parkinson’s Disease Questionnaire [45]. Using these metrics, Pompeu et al. [41] noted improvements in balance and gait, cardiopulmonary aptitude, and subjective quality-of-life measures. Lastly, Torres et al. [46] sought to assess the extent to which the Kinect can be used for the evaluation and diagnosis of PD. In brief, their approach was to use the Kinect to detect the presence of tremor, which occurs in more than 90% of patients while standing and more than 75% while resting [47]. Following a survey of available technologies, Torres et al. [46] concluded that the Kinect was the most appropriate widely available motion sensor, because it was “…the most adjusted to the criteria that allow for the objective and impartial diagnosis and evolution of PD, specifically in the evaluation of motor tremor-like symptoms”.

In sum, as the foregoing illustrates, since 2010 (the year in which Microsoft launched its motion-sensing camera) numerous projects have sought to capitalize on (1) the Kinect’s widespread availability, (2) its comparatively low cost, and (3) the relative accuracy of its joint-tracking software, accessible through the SDK. However, despite the rapid expansion of research employing the Kinect in a range of therapeutic and diagnostic contexts, significant work arguably remains to be done to optimize the technology for the needs of specific communities. Moreover, in 2014 Microsoft released a Kinect camera with upgraded functionality (e.g. a 60% wider field of view), affording researchers new development opportunities. In particular, Microsoft improved gesture recognition and tracking, which we have exploited in our application. Before turning to this research, however, we briefly describe the earlier iteration of our system referred to above, Version 1.

Version 1

Woolhouse et al. [5] presented a Kinect-based system designed to complement a dance-intervention program for people with PD in the vicinity of Hamilton, Ontario, Canada. Coordinated by program manager Jody Van De Klippe, the Hamilton City Ballet (HCB) Dance for Parkinson’s program is one of a growing number of therapeutic dance programs in Canada. Each semester consists of six biweekly classes held in a spacious community hall with ramp access, accommodating up to 40 students per class. The main purpose of the authors’ initial collaboration with the HCB Dance for Parkinson’s program was to pilot-test a technological dance system designed for everyday home use; a goal was to enable people with PD to dance on a daily basis rather than only when in class (once every two weeks).

Version 1 of the application presented users with a series of videos in which the instructor, Melania Pawliw (co-founder and Artistic Director of HCB), performed various seated dances taken from actual class routines. The objective was for users to match their hand positions, tracked by a Kinect camera mounted below a 27-inch monitor, to those of the instructor in the videos; a user’s hands were represented on screen as circles, which would glow when positioned over the instructor’s on-screen hands. The application’s hierarchical menu was navigated by moving and gripping an on-screen hand icon, which, in addition to needing a degree of agility, required relatively accurate lateral arm movements. Moreover, detection of the hand by the Kinect required users to hold either arm in a forward-extended position, which some understandably found effortful and awkward. To select on-screen menu options, users also had to perform a gripping motion with either hand, as if clenching a virtual object. Given the hand-movement impairments that PD can cause [48], some users found this problematic, leading to instances in which caregivers were required to provide assistance.
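The Version 1 matching rule can be expressed as a simple overlap test. The sketch below is hypothetical: it assumes both the user’s tracked hands and the instructor’s hands are already available as circles in screen coordinates (pixels), and treats a hand as “over” the instructor’s when the two circles overlap or touch. The article does not specify the exact criterion used, and the names `Circle` and `is_glowing` are invented for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Circle:
    x: float  # centre, screen pixels
    y: float
    r: float  # radius, screen pixels


def is_glowing(user_hand: Circle, instructor_hand: Circle) -> bool:
    """Hypothetical rule: the user's hand glows when its circle overlaps the instructor's."""
    distance = math.hypot(user_hand.x - instructor_hand.x, user_hand.y - instructor_hand.y)
    return distance <= user_hand.r + instructor_hand.r


print(is_glowing(Circle(100, 100, 20), Circle(130, 100, 20)))  # True (centres 30 px apart)
print(is_glowing(Circle(100, 100, 20), Circle(200, 100, 20)))  # False (centres 100 px apart)
```

Enlarging the radii would make the rule more forgiving of imprecise hand placement, which is one tuning lever for users with reduced motor control.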

The problems outlined above, confirmed in the feedback Woolhouse et al. [5] received from users with PD field-testing Version 1 in their homes, prompted us to rethink the system’s menu-navigation actions, as well as the structural design of the menu itself. Starting in fall 2014, the research team began developing Version 2 of the application by asking two related questions. First, what are the simplest limb gestures, detectable by the Kinect, that people with PD can perform with relative ease? Second, what is the smallest number of gestures required to navigate the entire menu, and how might this influence the design of its hierarchical structure? Our answers to these and related questions constitute the remainder of this paper; we begin with an overview of Version 2 of the application, before proceeding to a detailed description of the features of its menu system, which were designed with a specific population in mind, namely people with PD.

Version 2

Overview: In addition to the issues regarding menu navigation outlined above, users also raised concerns about the application’s general interactivity: the battery of dance videos was relatively quickly exhausted, leading some to desire greater novelty within the application. Moreover, once videoed, a dance remains fixed and cannot be modified or adapted to suit the specific requirements of a particular user. Given PD’s progressive nature, movement abilities vary considerably, from those with only minor symptoms to those who require constant care [49]. And for a given individual with PD, symptoms such as bradykinesia, akinesia, and hypokinesia can fluctuate from day to day [50]. As a result, in the application’s subsequent iteration, Version 2, we employed avatars animated with biological motion data (derived from a high-quality, passive-marker optical motion-capture system), which enabled us to create a virtual partner with “artificial dance intelligence” (Figure 1).

Depending upon a user’s abilities, the application was designed to select avatar dance movements of different magnitudes, based on a comparison between the Kinect’s real-time skeleton tracking and an optimal gesture score (stored in a gesture library provided by the SDK). If a user’s movements were restricted, for example by the effects of the disease, the avatar’s gestures became similarly limited, and vice versa. Upon entering a virtual studio (of which there were a number of “unlockable” types: forest, beach, cruise liner, etc.), the user was asked whether he or she was seated comfortably, and in a sufficiently safe environment to commence dancing. A real-time moving silhouette of the user, produced using the Kinect’s depth data, was integrated into the application as a sub-window (Figure 1, bottom right). In addition, during each dance, a user’s performance per gesture was shown via an incremental progress bar (Figure 1, left), which shifted to bronze, silver or gold depending upon movement accuracy. On completion of each dance, a summary of the user’s performance was displayed as a bar graph, showing the relative proportions of gestures executed at the bronze, silver and gold levels. Virtual medals and trophies could be won depending upon performance and how frequently a user engaged with the system; in-game reward systems have been shown to be motivational, encouraging greater engagement with applications [51-53]. We now turn to the menu system, through which the application outlined above was accessed.
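The adaptive behaviour and medal feedback described above can be sketched as follows. This is a hypothetical illustration only: it assumes each completed gesture yields a match score between 0 and 1 from comparing the tracked skeleton with the reference gesture, and the tier thresholds, the three magnitude levels and the function names are invented for the example rather than taken from the application.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical thresholds: the article does not report the values used.
GOLD, SILVER = 0.85, 0.70
# Hypothetical avatar movement magnitudes, smallest to largest.
MAGNITUDES = ["small", "medium", "large"]


def tier_for(score: float) -> str:
    """Map a per-gesture match score in [0, 1] to a medal tier."""
    if score >= GOLD:
        return "gold"
    if score >= SILVER:
        return "silver"
    return "bronze"


def choose_magnitude(recent_scores: List[float]) -> str:
    """Scale the avatar's next movements to the user's recent match scores."""
    if not recent_scores:
        return MAGNITUDES[len(MAGNITUDES) // 2]  # start in the middle
    mean = sum(recent_scores) / len(recent_scores)
    index = max(0, min(int(mean * len(MAGNITUDES)), len(MAGNITUDES) - 1))
    return MAGNITUDES[index]


def dance_summary(scores: List[float]) -> Dict[str, float]:
    """Proportion of gestures at each tier, as shown in the post-dance bar graph."""
    counts = Counter(tier_for(s) for s in scores)
    total = len(scores)
    return {tier: (counts[tier] / total if total else 0.0)
            for tier in ("gold", "silver", "bronze")}


scores = [0.9, 0.75, 0.4, 0.95]
print(dance_summary(scores))     # {'gold': 0.5, 'silver': 0.25, 'bronze': 0.25}
print(choose_magnitude(scores))  # large
```

Averaging over several recent gestures, rather than reacting to each one, is one way to follow day-to-day fluctuations in ability without the avatar jumping between levels on a single poor or good gesture.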

Menu concept: As set out above, a key goal was to design a menu navigable by simple limb gestures that could be readily detected by the Kinect, and that most people with PD could perform with relative ease. In addition, given that many people with PD are seniors [1], we sought to develop a menu that required little or no knowledge of gaming conventions, a key issue in game development identified by Graham McAllister and Gareth White in the landmark computer-game publication Evaluating User Experience in Games [54]:

Another example of accessibility is navigation flow through menus. Proficient gamers are used to a certain set of conventions for menu screens, such as where to find controller options, etc. but observing nonexpert players can reveal that this is a learnt association that may be at odds with the assumptions novice players bring with them (p.120).

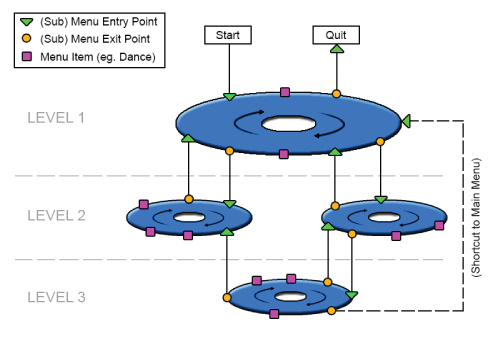

In order for navigation to be achieved using only two gestures (the minimum number we hypothesized to be necessary to move through the system), the menu was conceived as a series of hierarchical, rotatable discs, each representing a submenu containing a set of options, and connected to one another via entry and exit points. This conception enabled the following: once within a submenu, any item could be reached using a single gesture, one that spun the disc (i.e. submenu) about its center. A second, different gesture could then be used to select a particular option within the submenu, for example, a dance, a toggleable feature such as rhythmic auditory stimulation (described below), or a submenu entry/exit point. Figure 2 illustrates the application menu conceived as a series of hierarchical, rotatable submenus. Three levels are present: Level 1 is the main menu; Levels 2 and 3 are submenus, which could be, for example, a collection of dance routines selectable by the user. Also shown is a shortcut (right), enabling a user to navigate quickly between distant, nonadjacent points within the menu.
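The disc-based structure in Figure 2 can be modelled as a small tree of submenus. In the hypothetical sketch below, each disc holds a ring of options, and each option either performs an action, enters a child disc, exits to the parent, or jumps elsewhere via a shortcut; the class and field names are illustrative and are not taken from the application. Because the options form a ring that can be spun in either direction, the number of side-swipes needed to bring any option to the front is at most half the number of options.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Option:
    label: str
    kind: str  # "action", "enter" (child disc), "exit" (parent disc) or "shortcut"
    target: Optional["Disc"] = field(default=None, repr=False, compare=False)


@dataclass
class Disc:
    name: str
    options: List[Option] = field(default_factory=list)
    parent: Optional["Disc"] = field(default=None, repr=False, compare=False)

    def add_submenu(self, label: str, child: "Disc") -> None:
        """Link a child disc: an entry point here and an exit point on the child."""
        child.parent = self
        self.options.append(Option(label, "enter", child))
        child.options.append(Option("Back", "exit", self))


def swipes_to_reach(disc: Disc, front: int, wanted: int) -> int:
    """Fewest side-swipes to bring option `wanted` to the front, spinning either way."""
    n = len(disc.options)
    forward = (wanted - front) % n
    return min(forward, n - forward)


main = Disc("Main menu")
dances = Disc("Dances", [Option(f"Dance {i + 1}", "action") for i in range(5)])
main.add_submenu("Dances", dances)      # Dances now holds 5 dances + "Back"
settings = Disc("Settings")
studios = Disc("Studios", [Option("Beach scene", "action"), Option("Palm court", "action")])
settings.add_submenu("Studios", studios)
main.add_submenu("Settings", settings)
main.options.append(Option("Studios", "shortcut", studios))  # skips a level
print(swipes_to_reach(dances, 0, 5))    # 1: "Back" is one swipe away
```

Marking the back-references `repr=False, compare=False` keeps the parent/child cycle from causing infinite recursion when discs are printed or compared.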

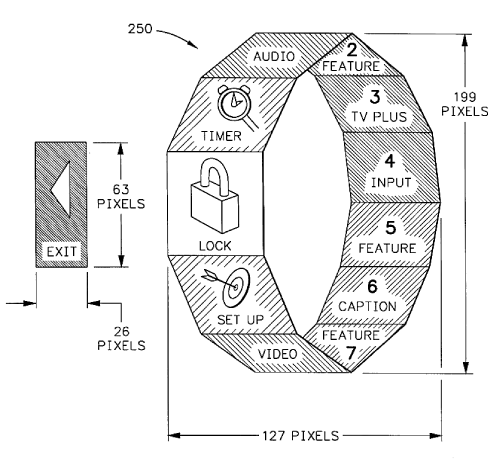

The conception described above and shown in Figure 2 could be viewed as a type of three-dimensional “pie” or “radial” menu. A pie menu is constructed from a circle divided into equal “pie slices”; each slice represents a menu item positioned on the circumference of the circle. The purpose of a pie menu is to provide a smooth, robust gestural method of interaction via a system’s graphical user interface, convenient for both novice and expert users [55]. And, as depicted in the adjacent levels within Figure 2, a slice may lead to another, hierarchically embedded pie submenu. More specifically, however, our approach could be considered synonymous with the “rotatable function menu” patented by Jeffrey Cove, William Gray, and Ernesto Villalobos in 2001, a design which is “substantially ring- or wheel-shaped” and consists of a “plurality of facets … arrayed circumferentially along an outer surface of the menu”² [56]. Moreover, as with our concept, Cove, Gray and Villalobos envisaged that a user could rotate their menu until the facet with the desired function was nearest in virtual space, at which point it could be selected (Figure 3).
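In a conventional pie menu, the direction of a stroke from the centre determines which slice is chosen. The sketch below shows one way to map a direction vector to a slice index when the circle is divided into n equal slices; it is a generic illustration of the pie-menu idea, with a hypothetical function name, and is not code from the application or from the cited patent. With n = 8 (an octagon), each slice spans 45 degrees.

```python
import math


def pie_slice(dx: float, dy: float, n_slices: int) -> int:
    """Index of the pie slice in direction (dx, dy) from the menu centre.

    Slice 0 is centred on the positive x-axis; indices increase
    counter-clockwise, and each slice spans 360 / n_slices degrees.
    """
    if n_slices < 1:
        raise ValueError("need at least one slice")
    width = 360.0 / n_slices
    angle = math.degrees(math.atan2(dy, dx)) % 360.0
    # Shift by half a slice so that slice 0 is centred on 0 degrees.
    return int(((angle + width / 2) % 360.0) // width)


print(pie_slice(1.0, 0.0, 8))   # 0 (pointing right)
print(pie_slice(0.0, 1.0, 8))   # 2 (pointing up)
print(pie_slice(0.0, -1.0, 8))  # 6 (pointing down)
```

Centring slice 0 on the axis, rather than starting it there, means a stroke slightly below horizontal still selects slice 0 instead of falling into the last slice.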

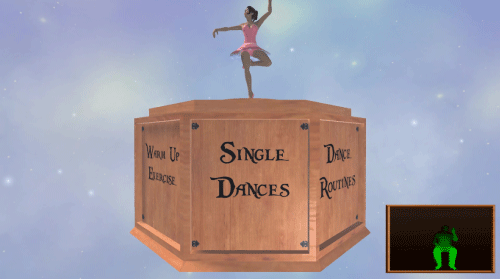

Motivated by a desire to create an application with positive associations and familiar imagery, we undertook to render the rotatable function menu as a ballerina jewelry box. Typically, upon opening this type of box, a toy ballerina begins to rotate, accompanied by music produced by the tuned teeth, or lamellae, of a steel comb plucked by a set of pins, otherwise known as a “musical box” [57]. The decision to model a key aspect of the user interface (UI) on a ballerina jewelry box fitted well with three aspects of the application. First, our application featured dance routines derived from ballet, thus matching the ballerina atop these types of jewelry boxes. Second, having conceived of the menu as a series of hierarchical, rotatable discs, we envisaged that our jewelry-box-cum-menu could be made to spin by means of a simple gesture detected by the Kinect. Third, the box could be constructed from multiple facets, each carrying a menu function. In addition, we sought to heighten users’ potential sense of affinity with the jewelry box by giving it an authentic, natural wooden texture [58]. Finally, in an endeavour to give the UI an otherworldly, enchanting quality, the jewelry box was made to float in virtual space, as if not subject to real-world forces such as gravity. The result, shown in Figure 4, was an octagonal rotatable function menu in the form of a wooden ballerina jewelry box that could be spun until a desired item was forward facing.

After consulting with people with PD in HCB’s Dance for Parkinson’s program, we determined that a swipe gesture in front of the body was readily performable by most users, either from left to right with the left hand, or from right to left with the right hand. Moreover, this gesture had the advantage of being compatible with the physics-based movement of the UI. Recall that the jewelry box was designed to rotate in virtual space; we surmised that a side-swipe gesture would give users a sense that they were spinning an object in the real world, which would, in theory, enable them to interact intuitively with the application’s menu. The “select-item” gesture decided upon was a downward swipe, made with either hand held in front of the body, from torso to lap. This had the advantage of being easily performable and similar in style to the side-swipe gesture, yet sufficiently distinct not to be misidentified by the Kinect SDK’s gesture-recognition system. Moreover, we anticipated that the relatively rapid swipe gestures would be beneficial, because resting-tremor symptoms are typically reduced during limb movement. In other words, navigation through the menu did not rely upon fine-grained motor actions, but rather upon simple, broad-scale motions executable by most users.
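The application relied on the Kinect SDK’s own gesture-recognition system, which is not reproduced here. As a rough, hypothetical stand-in, the sketch below classifies a short window of tracked hand positions into the two gesture types by net displacement. It assumes (x, y) positions in metres with y increasing upwards; the thresholds, gesture names and function name are invented for illustration and are not values from the SDK or the article.

```python
from typing import List, Optional, Tuple

# Hypothetical thresholds; not values from the Kinect SDK or the article.
MIN_TRAVEL_M = 0.25    # minimum net hand travel, in metres, to count as a swipe
DOMINANCE_RATIO = 2.0  # main axis must exceed the other axis by this factor


def classify_swipe(track: List[Tuple[float, float]]) -> Optional[str]:
    """Classify a hand trajectory of (x, y) points, y increasing upwards.

    Returns "swipe_left", "swipe_right", "swipe_down", or None.
    """
    if len(track) < 2:
        return None
    dx = track[-1][0] - track[0][0]
    dy = track[-1][1] - track[0][1]
    # Side-swipe: large, predominantly horizontal travel (spins the menu).
    if abs(dx) >= MIN_TRAVEL_M and abs(dx) >= DOMINANCE_RATIO * abs(dy):
        return "swipe_right" if dx > 0 else "swipe_left"
    # Down-swipe: large, predominantly downward travel (selects an item).
    if -dy >= MIN_TRAVEL_M and -dy >= DOMINANCE_RATIO * abs(dx):
        return "swipe_down"
    return None


print(classify_swipe([(-0.2, 0.0), (0.0, 0.01), (0.2, 0.02)]))  # swipe_right
print(classify_swipe([(0.0, 0.3), (0.02, 0.0)]))                # swipe_down
```

Requiring one axis to dominate the other is what keeps a sloppy diagonal movement from being read as either gesture, echoing the concern above that the select gesture be distinct enough not to be misidentified.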

Implementation: The application ran on a “state” machine that tracked the submenu and option the user was currently viewing. When the Kinect SDK detected a registered gesture, the state machine updated its current position in the menu accordingly. Each menu state stored the texture to be loaded onto each face of the jewelry box, along with the action to be performed once an option had been selected. Transition to a new submenu was achieved by loading a new menu state. Items were displayed in rotating order regardless of how many a menu contained: when the box was rotated, new textures were loaded onto the hidden, upcoming faces to match the appropriate option at the current-view index of the current menu state. Thus, if a submenu contained more than eight items (i.e. more than the number of facets), each item still appeared in succession, theoretically enabling an unlimited number of options to be displayed on the eight sides of the jewelry box.
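To make the windowing idea concrete, here is a hypothetical Python sketch of such a state machine. Labels stand in for textures, the gesture names (`swipe_left`, `swipe_right`, `swipe_down`) stand in for whatever the gesture recognizer reports, and all class and method names are invented for illustration; the actual application was built in a game engine. Each face shows the option at its offset from the front face, so after a one-step rotation at most one face, one that faces away from the user, needs new content, which is what lets an eight-sided box cycle through any number of options.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

N_FACES = 8             # the jewelry box is octagonal
OFFSETS = range(-3, 5)  # face positions relative to the front (0 = front, 4 = back)


@dataclass
class MenuState:
    labels: List[str]  # one label per option, standing in for a face texture
    on_select: Callable[[int], Optional["MenuState"]]  # next submenu, or None to stay


class JewelryBoxMenu:
    def __init__(self, state: MenuState) -> None:
        self.faces: List[str] = [""] * N_FACES
        self.load_state(state)

    def load_state(self, state: MenuState) -> List[int]:
        """Enter a (sub)menu: put its first option at the front and retexture the faces."""
        self.state, self.option, self.front = state, 0, 0
        return self._refresh()

    def _refresh(self) -> List[int]:
        """Give every face the option at its offset from the front; return faces that changed."""
        changed = []
        n = len(self.state.labels)
        for k in OFFSETS:
            face = (self.front + k) % N_FACES
            label = self.state.labels[(self.option + k) % n]
            if self.faces[face] != label:
                self.faces[face] = label  # in a game engine this would swap the texture
                changed.append(face)
        return changed

    def on_gesture(self, gesture: str) -> List[int]:
        """Apply a recognized gesture; return the faces whose content had to be reloaded."""
        if gesture in ("swipe_left", "swipe_right"):
            step = 1 if gesture == "swipe_right" else -1
            self.front = (self.front + step) % N_FACES
            self.option = (self.option + step) % len(self.state.labels)
            return self._refresh()
        if gesture == "swipe_down":
            next_state = self.state.on_select(self.option)
            if next_state is not None:
                return self.load_state(next_state)
        return []

    @property
    def front_label(self) -> str:
        return self.faces[self.front]


dances = MenuState([f"Dance {i + 1}" for i in range(12)], lambda i: None)
main = MenuState(["Dances", "Settings", "Trophies"], lambda i: dances if i == 0 else None)
menu = JewelryBoxMenu(main)
menu.on_gesture("swipe_down")                            # select "Dances"
print(menu.front_label)                                  # Dance 1
print(menu.on_gesture("swipe_right"), menu.front_label)  # [5] Dance 2
```

An "Exit" option fits the same model: its `on_select` simply returns the parent menu’s state.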

Features and settings: In addition to the intuitive physics-based concept of the rotating jewelry box, the menu contained various features and settings designed (1) to ensure safe and effective use of the application, and (2) to help maintain user motivation and interest. On booting the system for the first time, following the opening credits, the application automatically navigated to the instruction submenu. The instruction submenu was designed to assist users by providing short textual descriptions and voiceovers outlining specific methods for using the system. Instructional videos were also displayed, providing users with visual information on features of the system. For example, in the case of the side-swipe gestures required for navigation, a video showed a person repeatedly performing the gesture. The aim was to prevent confusion as to how a specific gesture should ideally be performed, particularly in cases where text and voice alone might be ambiguous. Navigation through the instruction submenu involved actions identical to those required within other submenus, thereby helping to familiarize users with essential system actions from the outset. An exit option within this submenu enabled users to navigate back to the main menu; thereafter, viewing the instruction submenu became optional.

A settings submenu, accessible via the main menu, enabled users to select various parameters of the system. For example, the settings submenu included several themed dance studios, such as “cruise ship”, “forest backdrop”, “beach scene”, and “palm court”. Once within the studio submenu, the name and image of each studio appeared on successive faces of the jewelry box; the user could swipe to whichever studio they desired and, as described above, select it by swiping downwards. An image of the user’s current studio appeared on the studio submenu face, thereby reminding them of their chosen dance venue. Initially some dance studios were locked, indicated by a padlock symbol over the image of that particular studio. If a user selected a locked studio, on-screen text was displayed stating the prerequisites for unlocking it, such as performing a particular dance a set number of times.
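The locking rule for studios can be sketched as a simple check against how often each dance has been performed. This is a hypothetical illustration: it assumes the application keeps a per-dance play count, and the `Studio` class, the `select_studio` function, the dance name "Waltz" and the message wording are all invented for the example.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class Studio:
    name: str
    required_dance: Optional[str] = None  # hypothetical rule: perform this dance...
    required_count: int = 0               # ...at least this many times

    def is_unlocked(self, plays: Dict[str, int]) -> bool:
        if self.required_dance is None:
            return True
        return plays.get(self.required_dance, 0) >= self.required_count


def select_studio(studio: Studio, plays: Dict[str, int], current: str) -> Tuple[str, str]:
    """Down-swipe on a studio face: return (studio now in use, on-screen message)."""
    if studio.is_unlocked(plays):
        return studio.name, f"Studio set to {studio.name}."
    remaining = studio.required_count - plays.get(studio.required_dance, 0)
    return current, (f"Locked: perform {studio.required_dance} "
                     f"{remaining} more time(s) to unlock {studio.name}.")


plays = {"Waltz": 2}
print(select_studio(Studio("Beach scene", "Waltz", 5), plays, "Palm court"))
# ('Palm court', 'Locked: perform Waltz 3 more time(s) to unlock Beach scene.')
```

Selecting a locked studio leaves the current studio unchanged and only produces the explanatory text, matching the behaviour described above.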

Also within the settings submenu, users were able to choose whether to navigate the application with the aid of rhythmic auditory stimulation (RAS), a technique for rehabilitating intrinsically rhythmical biological movements, such as walking. In brief, RAS consists of auditory stimuli presented in more-or-less isochronous rhythmical patterns, with which patients synchronize their movements. The technique has been found to be highly effective with respect to PD. For example, training patients with RAS consisting of audiotapes with metronome-pulse patterns, Thaut et al. [59] significantly improved gait initiation, velocity, stride length, and step cadence in comparison to matched non-RAS-trained patients. For pioneering work on Parkinsonian gait and RAS, see McIntosh et al. [60]; Thaut and Abiru [61] provide a review of RAS research in the rehabilitation of movement disorders. Our approach was to embed RAS at different tempi within the menu-accompaniment music: delicate musical-box style music, sounding as if produced by a steel comb plucked by a set of pins. The RAS provided isochronous accents at the selected tempo within the menu-accompanying music, with the aim of assisting the initiation of users’ swipe gestures.
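At a tempo of b beats per minute, isochronous accents fall every 60/b seconds, so at 120 bpm the interval is 0.5 s. The hypothetical sketch below computes the accent onset times, and the corresponding sample positions, at which a musical-box accompaniment could be accented. The article does not state which tempi or audio pipeline were used; the function names and the 44.1 kHz sample rate are assumptions.

```python
from typing import List


def accent_times(bpm: float, duration_s: float, offset_s: float = 0.0) -> List[float]:
    """Onset times (s) of isochronous accents at `bpm`, from `offset_s` up to `duration_s`."""
    if bpm <= 0:
        raise ValueError("tempo must be positive")
    period = 60.0 / bpm
    times = []
    k = 0
    while offset_s + k * period < duration_s:
        times.append(offset_s + k * period)  # k * period avoids accumulating rounding error
        k += 1
    return times


def accent_samples(bpm: float, duration_s: float, sample_rate: int = 44100) -> List[int]:
    """The same accents as sample indices into an audio buffer."""
    return [round(t * sample_rate) for t in accent_times(bpm, duration_s)]


print(accent_times(120, 2.0))    # [0.0, 0.5, 1.0, 1.5]
print(accent_samples(120, 2.0))  # [0, 22050, 44100, 66150]
```

Computing each onset as k times the period, rather than repeatedly adding the period, keeps the accents strictly isochronous over long pieces.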

People with PD are at increased risk of injury due to postural instability, random, disconnected stride times, and freezing of gait [62]. We therefore included a safety feature designed to assist a user in the event of incapacitation, such as falling from their chair while using the application. Using the Kinect SDK’s gesture-recognition system, if a user stopped being seated, for example because they had walked away or fallen, the application automatically paused and asked, via voice and on-screen text, “Do you require assistance, yes or no?” Using a voice-recognition library within the SDK, users could answer in the affirmative or negative. If a user answered “yes”, a further prompt asked whether they wished an emergency text message to be sent to their primary caregiver, whose contact number users entered beforehand in the settings submenu. Using a short message service (SMS) gateway company, the system could transmit SMS messages to caregivers via a telecommunications network for a small fee [63]. If a user answered “no” to either assistance prompt, the system returned to its paused state. This safety feature was optional and could be toggled on and off from the settings submenu as required.
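The assistance dialogue is essentially a small state machine driven by two inputs: a seated/not-seated signal derived from body tracking, and a recognized "yes"/"no" answer. The sketch below is a hypothetical model of that flow, not the SDK-based implementation. The `send_sms` callable stands in for whatever SMS gateway client is used (no particular gateway API is implied), and the message text is a placeholder. The paper does not specify what happens after the text is sent, so the sketch assumes a return to the paused state.

```python
from enum import Enum, auto
from typing import Callable, Optional


class State(Enum):
    RUNNING = auto()
    ASK_ASSISTANCE = auto()  # "Do you require assistance, yes or no?"
    ASK_SEND_SMS = auto()    # "Send an emergency text to your caregiver?"
    PAUSED = auto()


class SafetyMonitor:
    """Hypothetical model of the seated check and assistance dialogue."""

    def __init__(self, send_sms: Callable[[str, str], None],
                 caregiver_number: Optional[str], enabled: bool = True):
        self.send_sms = send_sms  # stand-in for an SMS gateway client
        self.caregiver_number = caregiver_number  # entered in settings
        self.enabled = enabled  # the feature can be toggled in settings
        self.state = State.RUNNING

    def on_seated_changed(self, seated: bool) -> None:
        # Leaving the seat (walking away or falling) pauses and prompts.
        if self.enabled and not seated and self.state is State.RUNNING:
            self.state = State.ASK_ASSISTANCE

    def on_answer(self, answer: str) -> None:
        answer = answer.strip().lower()
        if answer not in ("yes", "no"):
            return  # unrecognized speech: keep waiting for yes/no
        if self.state is State.ASK_ASSISTANCE:
            self.state = State.ASK_SEND_SMS if answer == "yes" else State.PAUSED
        elif self.state is State.ASK_SEND_SMS:
            if answer == "yes" and self.caregiver_number:
                self.send_sms(self.caregiver_number,
                              "The user has requested assistance.")
            # Either way the application returns to its paused state
            # (post-SMS behavior is an assumption).
            self.state = State.PAUSED
```

Injecting the sender as a callable keeps the dialogue logic testable without a network connection or gateway account.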

With respect to maintaining user motivation and interest, the application included an achievement-tracking component that led to the accumulation of virtual trophies upon completion of certain objectives, e.g. number of dances performed in a particular studio. Trophies were collected and housed within a cabinet in a virtual trophy room, accessible via the main menu. Navigation to and inspection of trophies within the cabinet required the same swipe gestures as described above, thereby integrating this aspect of the application into the menu as a whole. For example, when facing the cabinet, swiping sideways enabled users to move between trophies; a simple downward swipe with either hand allowed users to inspect each trophy individually (a selected trophy levitated slightly and rotated). Locked trophies appeared in transparent “ghost” form within the cabinet. Selecting a locked trophy displayed on-screen text, informing users how it could be unlocked, e.g. by performing a certain number of gold-level gestures within a dance. In a similar manner to the individual submenus, navigation through the trophy cabinet was itself rotatable—swiping the last trophy sideways looped users back to the first trophy within the cabinet. As a result, trophy navigation required the same two gestures as used elsewhere in the menu: side swipe to browse through options; down swipe to select. Users could quit the trophy room by navigating to a location in the cabinet that displayed the word “Exit”. Selecting “Exit” returned users to the main menu, which, as mentioned previously, provided access to the application’s range of dance activities and exercises.
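Because the cabinet wraps around, trophy navigation can be modeled as a ring of slots indexed modulo its length, with side swipes moving the index and a down swipe acting on the current slot. The sketch below is hypothetical: the class names, the trophy names, and the assumption that "Exit" occupies one slot in the ring are illustrative, not taken from the application's code.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class Trophy:
    name: str
    unlocked: bool
    unlock_hint: str  # text shown when a locked ("ghost") trophy is selected


EXIT = "Exit"


class TrophyCabinet:
    """Hypothetical circular cabinet: side swipe browses, down swipe selects."""

    def __init__(self, trophies: List[Trophy]):
        # Assumption: the "Exit" location is one more slot in the ring.
        self.slots: List[Union[Trophy, str]] = list(trophies) + [EXIT]
        self.index = 0

    def swipe_side(self, direction: int) -> None:
        # direction is +1 or -1; the modulo wraps past the last slot
        # back to the first (and vice versa).
        self.index = (self.index + direction) % len(self.slots)

    def swipe_down(self) -> str:
        slot = self.slots[self.index]
        if isinstance(slot, str):
            return "main menu"  # selecting "Exit" leaves the trophy room
        if slot.unlocked:
            return f"inspect {slot.name}"  # trophy levitates and rotates
        return slot.unlock_hint
```

The modulo step is what lets the same two gestures cover the whole cabinet: no "back" or "scroll to start" gesture is needed.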

Summary

This paper has described the philosophy and development process underpinning the creation of a music-dance application for people with PD, with particular emphasis on menu navigation. In addition to being predominantly from a generation with limited exposure to video gaming, people with PD experience a range of motor disabilities that can affect their willingness to engage with interactive motion-sensing technologies. Our approach to these potential difficulties has been multipronged. First, menu navigation was actuated using only two simple swipe gestures (sideways and downwards), identified by users of a previous version of the application as being readily executable by most people with PD. Second, we conceived of the menu as a set of revolving discs, each representing a submenu with a set of options and connected to one another via entry and exit points; in short, a rotatable function menu. Throughout, care was taken to make the look and feel of the menu attractive and its use as intuitive as possible. For example, we cast the rotatable function menu as a child’s traditional jewelry box, rendered in natural wood and accompanied by appropriate music-box style music. From the perspective of ease of use, the side-swipe gestures were compatible with the physics-based movement of the UI, giving users an intuitive sense that they were spinning a real-world object.
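The physics-based behavior summarized here, in which a side swipe sets the box spinning and it comes to rest with one facet facing forward, can be sketched as angular velocity with exponential damping followed by snapping to the nearest facet. This is a hypothetical Python model rather than the game-engine code used in the application; the class name, friction rate, snapping threshold, and swipe gain are assumed values.

```python
import math


class JewelryBoxMenu:
    """Hypothetical physics-based rotatable menu with n facets."""

    def __init__(self, n_faces: int, friction: float = 3.0,
                 snap_speed: float = 5.0):
        self.n = n_faces
        self.step = 360.0 / n_faces   # degrees between adjacent facets
        self.angle = 0.0              # current rotation, degrees
        self.velocity = 0.0           # angular velocity, degrees per second
        self.friction = friction      # exponential damping rate, 1/s (assumed)
        self.snap_speed = snap_speed  # below this speed, settle on a facet

    def swipe(self, hand_velocity: float, gain: float = 1.0) -> None:
        # A side swipe imparts angular velocity proportional to hand speed.
        self.velocity += gain * hand_velocity

    def update(self, dt: float) -> None:
        # Integrate rotation, then decay velocity exponentially (friction).
        self.angle += self.velocity * dt
        self.velocity *= math.exp(-self.friction * dt)
        if abs(self.velocity) < self.snap_speed:
            # Settle so that exactly one facet faces the user.
            self.velocity = 0.0
            self.angle = round(self.angle / self.step) * self.step

    def front_face(self) -> int:
        # Index of the forward-facing facet, i.e. what a down swipe selects.
        return round(self.angle / self.step) % self.n
```

In use, the engine would call `update` once per frame (e.g. with `dt = 1/60`) and read `front_face()` when a down swipe is detected; a faster swipe simply spins the box past more facets before it settles.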

Music and dance are “rich” cultural products: they can not only motivate, engage and entertain users, but also elicit complex brain responses that appear to be of considerable benefit to people with neurological deficits, such as those with PD [64,65]. If these benefits are to reach a wider audience, and to be enjoyed outside the confines of specialist clinics and the practices of enlightened therapists, well-designed technological applications will surely have to play their part. The extent to which we have succeeded in this endeavour remains to be seen; however, we believe that the foregoing represents a significant step towards making motion-sensing technologies both accessible and fun for a hitherto relatively excluded population.

Acknowledgement

This project was supported with a Forward With Integrity grant from the Office of the President, McMaster University, and an Arts Research Board grant awarded to the first author. In addition, the authors would like to thank the Office of the Vice President of Research and International Affairs, McMaster University, for their financial support. We would also like to acknowledge Mitch Errygers and Nick Rogers, who assisted with programming, and Jotthi Bansal and Maddie Cox for their significant contributions to the project. Last, but by no means least, we would also like to thank the Founders and Artistic Directors of HCB, Melania Pawliw and Max Ratevosian, and the program manager of HCB Dance for Parkinson’s, Jody Van De Klippe, for their kindness and dedication to the project. The image in Figure 3 is reproduced by kind permission of Jeffrey M. Cove, part inventor of the rotatable function menu.

References

- Van Den Eeden SK, Tanner CM, Bernstein AL, Fross RD, Leimpeter A, et al. (2003) Incidence of Parkinson’s disease: variation by age, gender, and race/ethnicity. Am J Epidemiol 157: 1015-1022.

- Evans DA, Funkenstein HH, Albert MS, Scherr PA, Cook NR, et al. (1989) Prevalence of Alzheimer's disease in a community population of older persons: higher than previously reported. JAMA 262: 2551-2556.

- Quinn N, Critchley P, Marsden CD (1987) Young onset Parkinson’s disease. Movement Disorders 2: 73-91.

- Bierre K, Chetwynd J, Ellis B, Hinn DM, Ludi S, et al. (2005) Game not over: Accessibility issues in video games. In Proc. of the 3rd International Conference on Universal Access in Human-Computer Interaction.

- Woolhouse MH, Williams S, Zheng S, General A (2015) Creating technology-based dance activities for people with Parkinson’s. International Journal of Health, Wellness and Society 5: 107-121.

- Yao SC, Hart AD, Terzella MJ (2013) An evidence-based osteopathic approach to Parkinson disease. Osteopathic Family Physician 5: 96-101.

- Dorsey ER, Constantinescu R, Thompson JP, Biglan KM, Holloway RG, et al. (2007) Projected number of people with Parkinson disease in the most populous nations, 2005 through 2030. Neurology 68: 384-386.

- de Lau LML, Breteler MMB (2006) Epidemiology of Parkinson’s disease. Lancet Neurol 5: 525-535.

- Poewe W (2006) The natural history of Parkinson’s disease. Journal of Neurology 253: vii2-vii6.

- Jankovic J (2008) Parkinson’s disease: Clinical features and diagnosis. J Neurol Neurosurg Psychiatry 79: 368-376.

- National Collaborating Centre for Chronic Conditions (2006) Symptomatic pharmacological therapy in Parkinson’s disease. London: Royal College of Physicians (UK).

- Kringelbach ML, Jenkinson N, Owen SLF, Aziz TZ (2007) Translational principles of deep brain stimulation. Nature Reviews Neuroscience 8: 623-635.

- Bronstein JM, Tagliati M, Alterman RL, Lozano AM, Volkmann J, et al. (2011) Deep brain stimulation for Parkinson disease: an expert consensus and review of key issues. Archives of Neurology 68: 165.

- Entertainment Software Association (2014) Annual Report.

- Zhang Z (2012) Microsoft Kinect sensor and its effect. IEEE MultiMedia 19: 4-10.

- Haik J, Tessone A, Nota A, Mendes D, Raz L, et al. (2006) The use of video capture virtual reality in burn rehabilitation: the possibilities. J Burn Care Res 27: 195-197.

- Rand D, Kizony R, Weiss PTL (2008) The Sony PlayStation II EyeToy: low-cost virtual reality for use in rehabilitation. J Neurol Phys Ther 32: 155-163.

- Bateni H (2012) Changes in balance in older adults based on use of physical therapy vs the Wii Fit gaming system: a preliminary study. Physiotherapy 98: 211-216.

- Jelsma J, Pronk M, Ferguson G, Jelsma-Smit D (2013) The effect of the Nintendo Wii Fit on balance control and gross motor function of children with spastic hemiplegic cerebral palsy. Developmental Neurorehabilitation 16: 27-37.

- Ellis RJ, Ng YS, Zhu S, Tan DM, Anderson B, et al. (2015) A validated smartphone-based assessment of gait and gait variability in Parkinson’s disease. PLoS One 10: e0141694.

- Huang JD (2011) Kinerehab: a Kinect-based system for physical rehabilitation: a pilot study for young adults with motor disabilities. Proceedings of the 13th International ACM SIGACCESS Conference on Computers and Accessibility, Scotland, UK.

- Chang YJ, Han WY, Tsai YC (2013) A Kinect-based upper limb rehabilitation system to assist people with cerebral palsy. Research in Developmental Disabilities 34: 3654-3659.

- Patel S, Greasley K, Watson PJ (2007) Barriers to rehabilitation and return to work for unemployed chronic pain patients: a qualitative study. Eur J Pain 11: 831-840.

- Schönauer C, Pintaric T, Kaufmann H, Jansen-Kosterink S, Vollenbroek-Hutten M (2011) Chronic pain rehabilitation with a serious game using multimodal input. In Virtual Rehabilitation (ICVR), 2011 International Conference on IEEE.

- Michael DR, Chen SL (2005) Serious games: Games that educate, train, and inform. Muska & Lipman/Premier-Trade.

- Connolly TM, Boyle EA, MacArthur E, Hainey T, Boyle JM (2012) A systematic literature review of empirical evidence on computer games and serious games. Computers and Education 59: 661-686.

- Gee D, Chu MW, Blimke S, Rockwell G, Gouglas S, et al. (2014) Assessing serious games: The GRAND assessment framework. Digital Studies/Le champ numérique 4.

- Crombez G, Vlaeyen JW, Heuts PH, Lysens R (1999) Pain-related fear is more disabling than pain itself: evidence on the role of pain-related fear in chronic back pain disability. Pain 80: 329-339.

- Fernández-Baena A, Susin A, Lligadas X (2012) Biomechanical validation of upper-body and lower-body joint movements of Kinect motion capture data for rehabilitation treatments. In Intelligent Networking and Collaborative Systems (INCoS), 2012 4th International Conference on IEEE.

- Kwakkel G, van Peppen R, Wagenaar RC, Dauphinee SW, Richards C, et al. (2004) Effects of augmented exercise therapy time after stroke: a meta-analysis. Stroke 35: 2529-2539.

- Sin H, Lee G (2013) Additional virtual reality training using Xbox Kinect in stroke survivors with hemiplegia. Am J Phys Med Rehabil 92: 871-880.

- Saini S, Rambli DRA, Sulaiman S, Zakaria MN, Shukri SRM (2012) A low-cost game framework for a home-based stroke rehabilitation system. In Computer and Information Science (ICCIS), 2012 International Conference on IEEE.

- World Health Organization (2015) Dementia. Fact sheet N°362.

- Leone A, Caroppo A, Siciliano P (2014) A natural user-interface based platform for cognitive rehabilitation in Alzheimer’s disease patients. Gerontechnology 13: 244.

- De Urturi Breton ZS, Zapirain BG, Zorrilla AM (2012) Kimentia: Kinect based tool to help cognitive stimulation for individuals with dementia. In e-Health Networking, Applications and Services (Healthcom), 2012 IEEE 14th International Conference on IEEE.

- Mandiliotis D, Toumpas K, Kyprioti K, Kaza K, Barroso J, et al. (2013) Symbiosis: An Innovative Human-Computer Interaction Environment for Alzheimer’s Support. In Universal Access in Human-Computer Interaction. User and Context Diversity, Springer Berlin Heidelberg.

- Sacco G, Sadoun GB, Piano J, Foulon P, Robert P (2014) AZ@GAME: Alzheimer and associated pathologies game for autonomy maintenance evaluation. Gerontechnology 13: 275.

- Caroppo A, Leone A, Siciliano P, Sancarlo D, D’Onofrio G, et al. (2014) Cognitive home rehabilitation in Alzheimer’s disease patients by a virtual personal trainer. In Ambient Assisted Living, Springer International Publishing.

- Galna B, Jackson D, Schofield G, McNaney R, Webster M, et al. (2014) Retraining function in people with Parkinson’s disease using the Microsoft Kinect: game design and pilot testing. J Neuroeng Rehabil 11: 60.

- Jackson SA, Marsh HW (1996) Development and validation of a scale to measure optimal experience: The Flow State Scale. Journal of Sport and Exercise Psychology 18: 17-35.

- Pompeu JE, Arduini LA, Botelho AR, Fonseca MBF, Pompeu SMAA, et al. (2014) Feasibility, safety and outcomes of playing Kinect Adventures!™ for people with Parkinson's disease: a pilot study. Physiotherapy 100: 162-168.

- Falvo MJ, Earhart GM (2009) Six-minute walk distance in persons with Parkinson’s disease: A hierarchical regression model. Archives of Physical Medicine and Rehabilitation 90: 1004-1008.

- Horak FB, Wrisley DM, Frank J (2009) The balance evaluation systems test (BESTest) to differentiate balance deficits. Physical Therapy 89: 484-498.

- Huang SL, Hsieh CL, Wu RM, Tai CH, Lin CH, et al. (2011) Minimal detectable change of the Timed “Up and Go” Test and the Dynamic Gait Index in people with Parkinson’s disease. Phys Ther 91: 114-121.

- Jenkinson C, Fitzpatrick R, Peto V, Greenhall R, Hyman N (1997) The Parkinson’s disease questionnaire (PDQ-39): development and validation of a Parkinson’s disease summary index score. Age and Ageing 26: 353-357.

- Torres R, Huerta M, Clotet R, González R, Sánchez LE, et al. (2015) A Kinect based approach to assist in the diagnosis and quantification of Parkinson’s disease. In VI Latin American Congress on Biomedical Engineering CLAIB 2014, Paraná, Argentina 29, 30 and 31 October 2014, Springer International Publishing.

- Koller WC, Vetere-Overfield B, Barter R (1989) Tremors in early Parkinson’s disease. Clinical Neuropharmacology 12: 293-297.

- Hoshiyama M, Kaneoke Y, Koike Y, Takahashi A, Watanabe S (1994) Hypokinesia of associated movement in Parkinson's disease: a symptom in early stages of the disease. J Neurol 241: 517-521.

- Rahman S, Griffin HJ, Quinn NP, Jahanshahi M (2008) Quality of life in Parkinson’s disease: the relative importance of the symptoms. Movement Disorders 23: 1428-1434.

- Marsden CD, Parkes JD, Quinn N (1982) Fluctuations of disability in Parkinson’s disease: clinical aspects. Movement Disorders 2: 96-122.

- Domínguez A, Saenz-de-Navarrete J, De-Marcos L, Fernández-Sanz L, Pagés C, et al. (2013) Gamifying learning experiences: Practical implications and outcomes. Computers and Education 63: 380-392.

- Westwood D, Griffiths MD (2010) The role of structural characteristics in video-game play motivation: A Q-methodology study. Cyberpsychol Behav Soc Netw 13: 581-585.

- Kebritchi M (2008) Examining the pedagogical foundations of modern educational computer games. Computers and Education 51: 1729-1743.

- McAllister G, White GR (2010) Video game development and user experience. In Evaluating User Experience in Games, Springer London.

- Hopkins D (1991) The design and implementation of pie menus. Dr. Dobb’s Journal 16: 16-26.

- Cove JM, Gray WS, Villalobos ES (2001) U.S. Patent No. 6,266,098. Washington, DC: U.S. Patent and Trademark Office, USA.

- Clark JE (1961) Musical boxes: a history and an appreciation, London.

- Overvliet KE, Soto-Faraco S (2011) I can't believe this isn't wood! An investigation in the perception of naturalness. Acta Psychologica 136: 95-111.

- Thaut MH, McIntosh GC, Rice RR, Miller RA, Rathbun J, et al. (1996) Rhythmic auditory stimulation in gait training for Parkinson’s disease patients. Movement Disorders 11: 193-200.

- McIntosh GC, Brown SH, Rice RR, Thaut MH (1997) Rhythmic auditory-motor facilitation of gait patterns in patients with Parkinson's disease. J Neurol Neurosurg Psychiatry 62: 22-26.

- Thaut MH, Abiru M (2010) Rhythmic auditory stimulation in rehabilitation of movement disorders: A review of current research. Music Perception 27: 263-269.

- Bloem BR, Hausdorff JM, Visser JE, Giladi N (2004) Falls and freezing of gait in Parkinson’s disease: a review of two interconnected, episodic phenomena. Movement Disorders 19: 871-884.

- Katankar VK, Thakare VM (2010) Short message service using SMS gateway. International Journal on Computer Science and Engineering 2: 1487-1491.

- Earhart GM (2009) Dance as therapy for individuals with Parkinson’s disease. Eur J Phys Rehabil Med 45: 231-238.

- Hackney ME, Kantorovich S, Earhart GM (2007) A study on the effects of Argentine tango as a form of partnered dance for those with Parkinson disease and the healthy elderly. American Journal of Dance Therapy 29: 109-127.
