Explainable AI in Brain Cancer Diagnosis: Interpreting MRI-Based Deep Neural Networks for Clinical Decision Support

*Corresponding Author: Ayesha Rahman, Department of Biomedical Engineering, Bangladesh University of Engineering and Technology (BUET), Dhaka, Bangladesh, Email: ayesha_r6340@gmail.com

Received Date: Mar 01, 2025 / Accepted Date: Mar 31, 2025 / Published Date: Mar 31, 2025

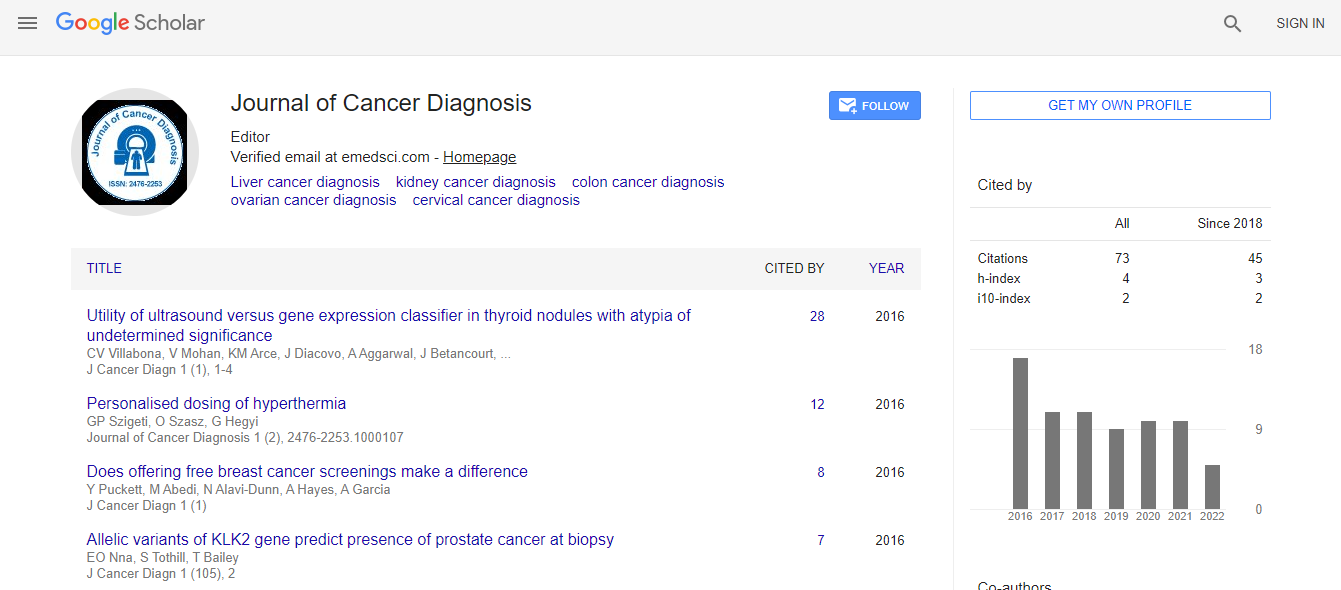

Citation: Ayesha R (2025) Explainable AI in Brain Cancer Diagnosis: Interpreting MRI-Based Deep Neural Networks for Clinical Decision Support. J Cancer Diagn 9: 287.

Copyright: © 2025 Ayesha R. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Abstract

The integration of artificial intelligence (AI) into medical imaging has led to significant improvements in brain cancer detection, particularly through the use of deep neural networks (DNNs) on magnetic resonance imaging (MRI) data. However, the “black box” nature of these models has raised concerns regarding their interpretability and reliability in clinical settings. This article reviews the state of explainable AI (XAI) techniques applied to MRI-based brain tumor diagnosis, focusing on the interpretation of deep learning outputs for clinical decision support. By emphasizing transparency, accountability, and clinician trust, explainable models can bridge the gap between algorithmic prediction and medical reasoning, fostering safe and ethical AI adoption in neuro-oncology.
